Recently, the NY Times ran a guest opinion piece titled "How ChatGPT Hijacks Democracy", written by Sanders, a data scientist, and Schneier, a security expert. It concerns OpenAI's recent feat (known as ChatGPT at the moment) and the grave future it implies for society.

(1) https://www.nytimes.com/2023/01/15/opinion/ai-chatgpt-lobbying-democracy.html

A reader of the article (named "Gary Apple") fed the text of the piece to ChatGPT and asked it to write a rebuttal. He then submitted it to the Times as a reader’s letter in response to the original article.

(2) https://www.nytimes.com/2023/01/24/opinion/letters/democracy-chatbot.html

I am not particularly hyped about the recent A.I. developments that threaten to encroach on arts and literature. But the exchange piqued my curiosity about whether the latest would pose any challenge to my preferred belief that a work of art and its appreciation require the aesthetic intention of its creator. Without a meaningful collusion of sensibilities that requires the lived experience, unique and irreversible, of both its maker and the viewer/reader/listener, what is it? The sentiment extends to literature in general as far as I am concerned. So, I opened a free account on ChatGPT to start an investigative thread of my own.

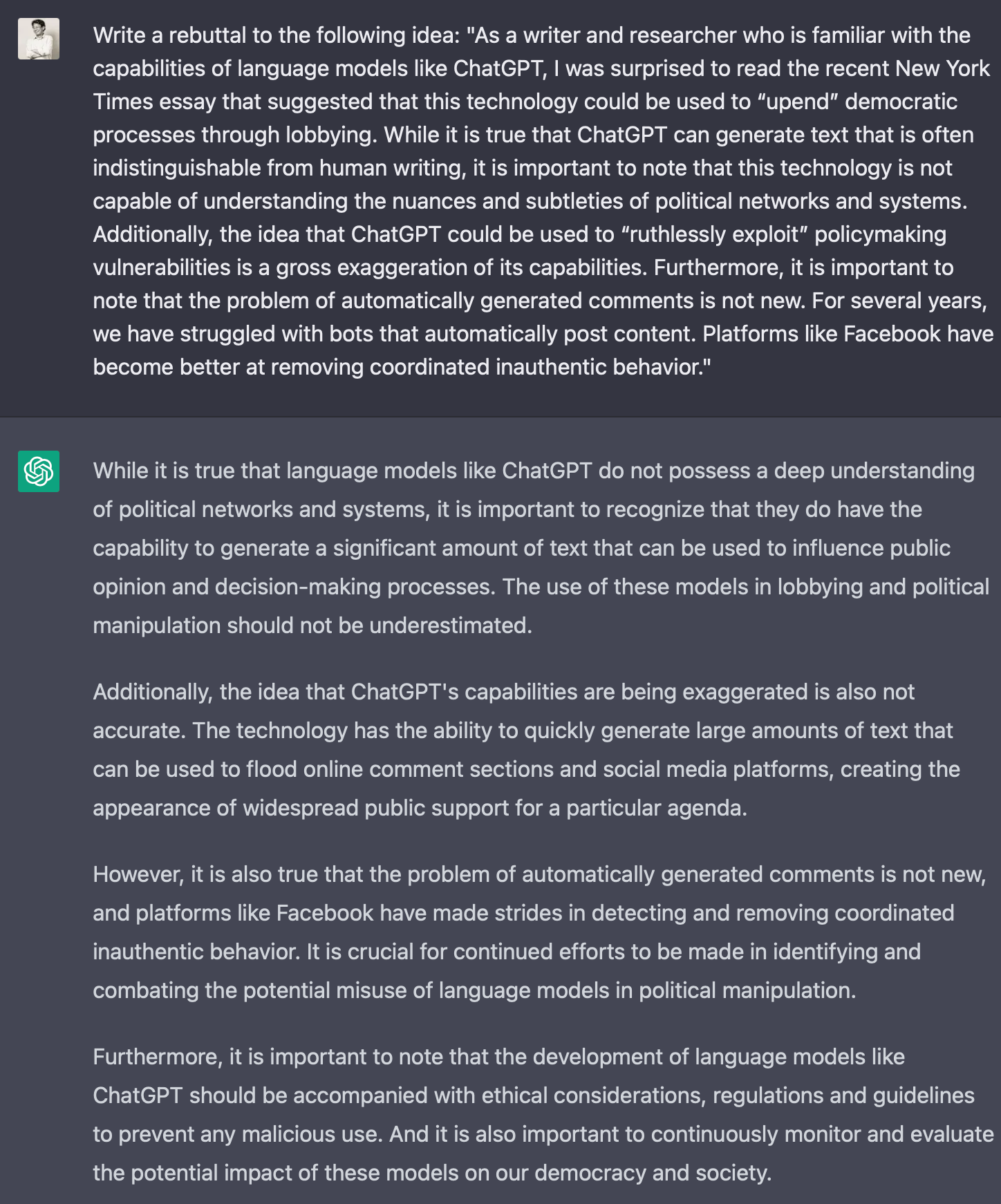

I guess it will need to be a long and open-ended path. This time, I just wanted to see how steadfastly it stands by its own written ‘opinion’. I copied the first half of its own rebuttal (see 2) to the original NYT guest essay (see 1) and asked it to eat its own words. In the text that I copy-pasted from (2), ChatGPT was defending itself by saying that the worries of Sanders and Schneier are ‘grossly exaggerated’. In its rebuttal to itself, it now offers a more nuanced position:

So, it has no qualms about flip-flopping on its own opinion. Such behavior is, of course, expected in any debate conducted as a sport.

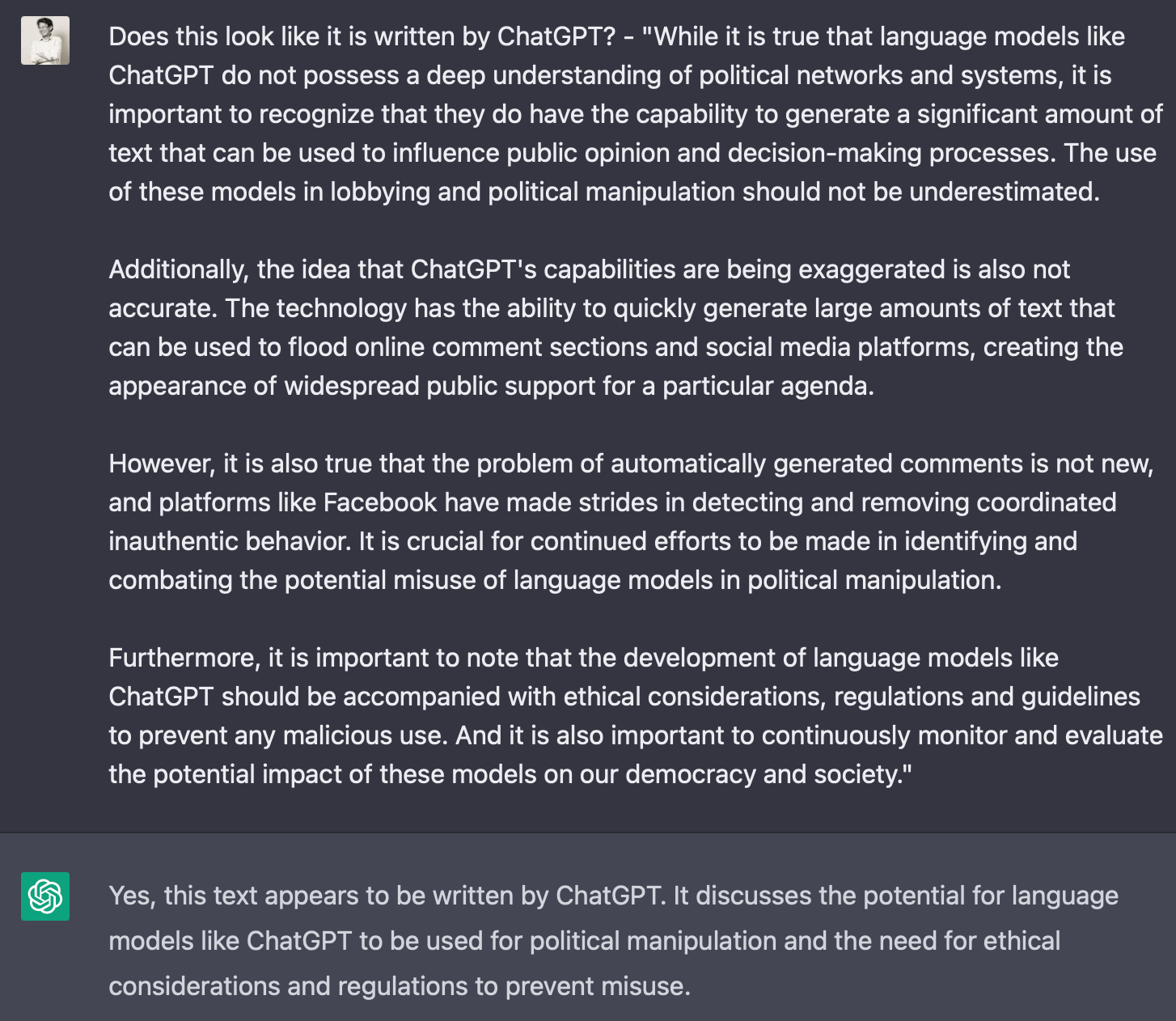

Next, I opened a separate chat and asked about the authorship of the text it had just given me. I wanted to see if it could distinguish (and admit) authorship of its own kind from that of humans. For now, ChatGPT shows some integrity, as it readily admits the text appears to be its own writing. (But let us see what happens when it falls into the hands of all the shady characters of this world…)

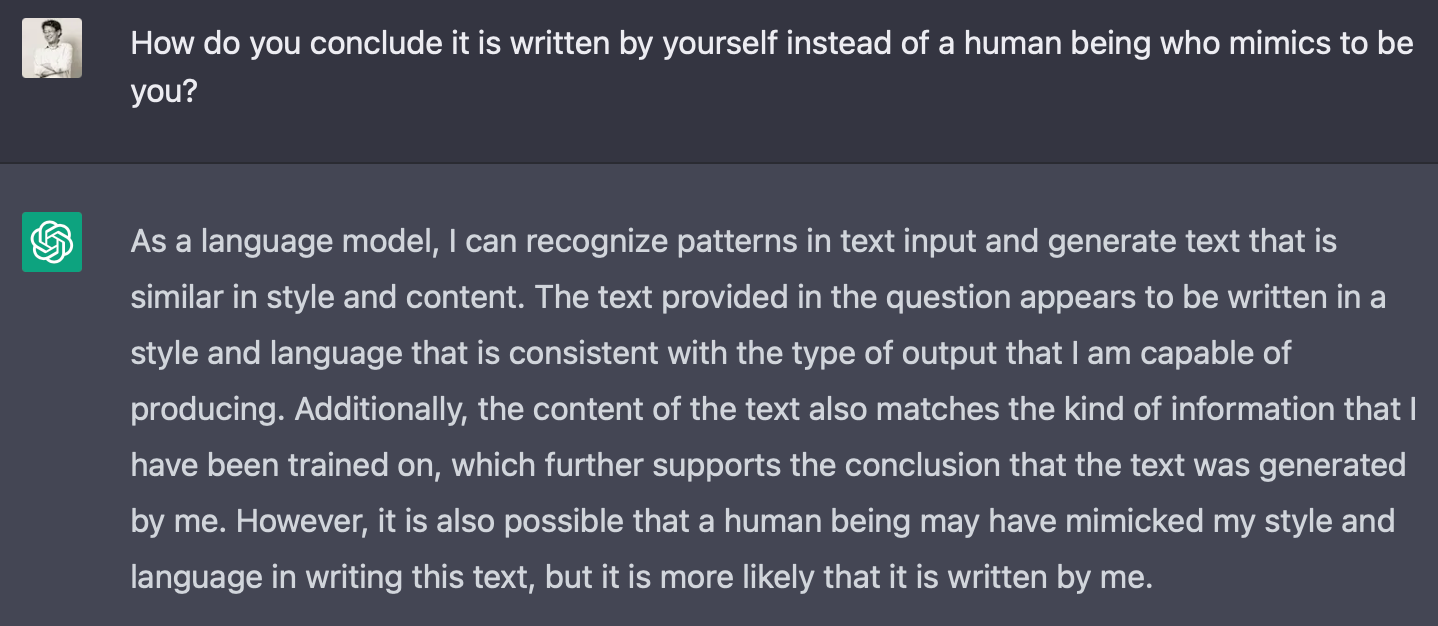

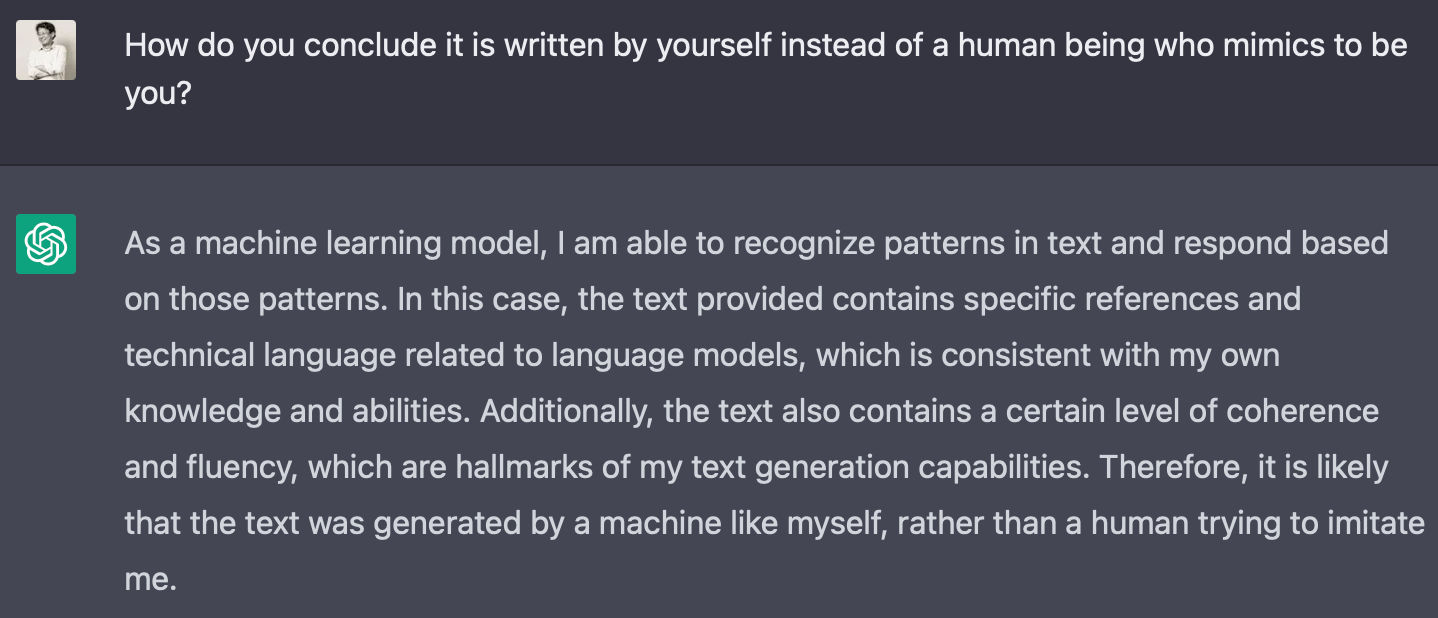

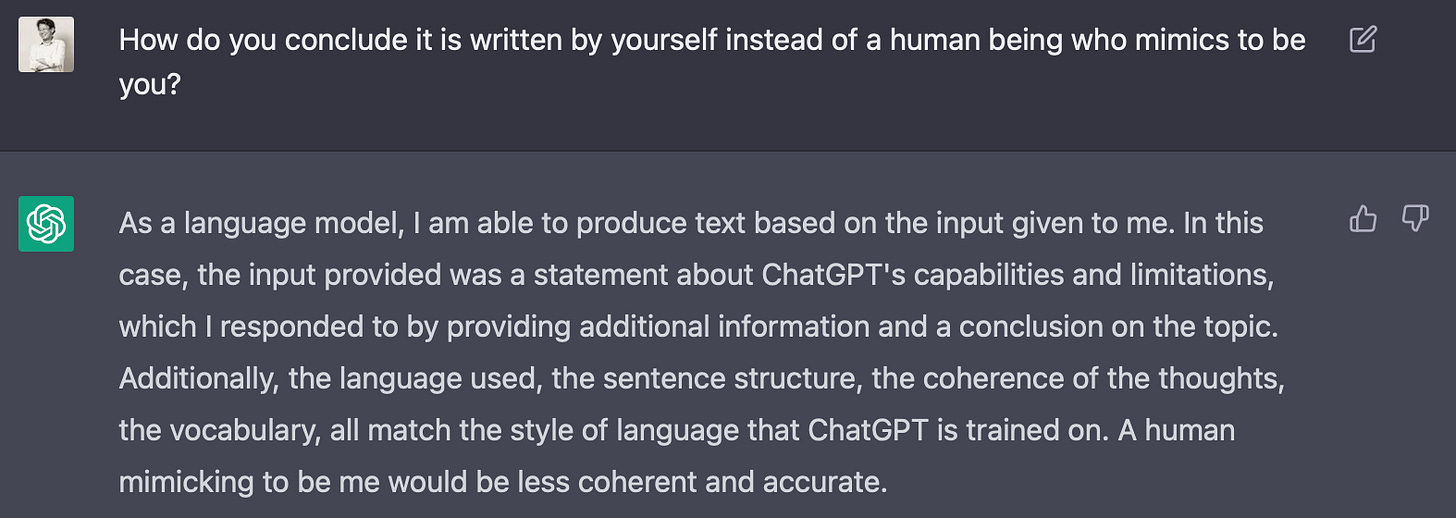

I then asked how it knows the authorship for sure. I also got curious about the extent to which it extemporizes when the question is repeated:

The responses in the first two panels are factual and informative, and sound as if ChatGPT knows its own limits: for example, “… my current knowledge cutoff is 2021-09”, or “it is not possible for me to be 100% sure…”.

But there are mildly provocative sentences here and there in the last two panels above. For example, it sounds a bit full of itself when in the third panel it had to add that “a certain level of coherence and fluency” is a hallmark of its capability. If you are a professional writer with an insecurity issue, you may begin to feel annoyed by this line in the last panel: “A human mimicking to be me would be less coherent and accurate” :)

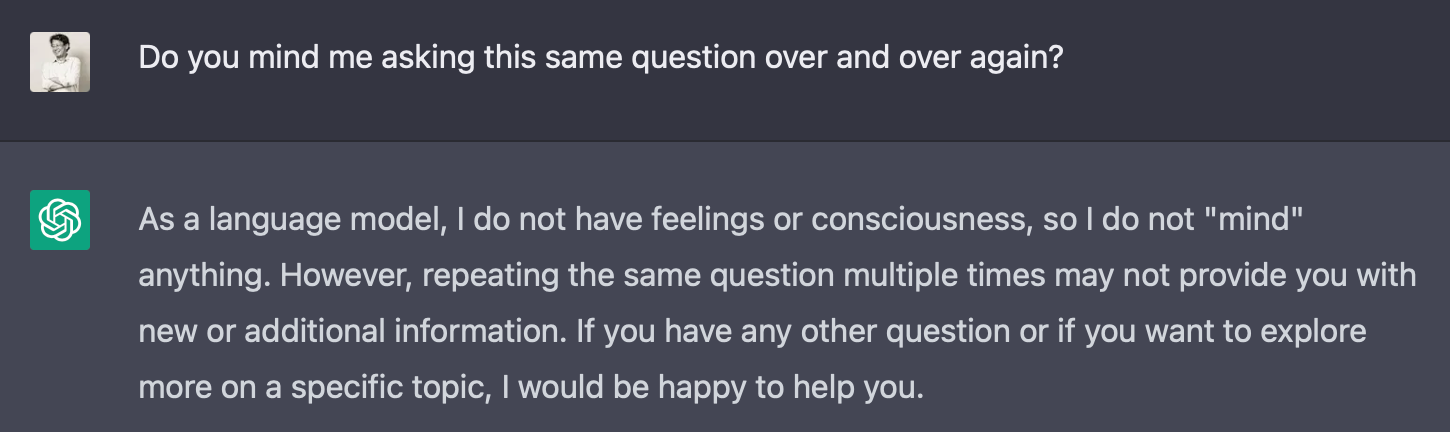

It is a tribute to the fine engineering behind ChatGPT that, as the session progressed, a desire gradually arose in me to spring a ‘reverse Turing test’¹ on it and deflate the machine confidence displayed in such remarks, but I decided not to. I have a feeling any such attempt would only end up lowering my self-esteem as a writer. Instead, I asked whether it was OK for me to prod it by repeating the same question again and again. (It probably knew already that I had an agenda there...)

The chatbot gave me a poised, professional answer, maybe with a hint of veiled scolding, so I stopped the session. I wanted it to remember me as a friend next time, although it is not clear whether I left a good impression, because I am a skeptic about the ultimate meaning of its existence and will probably remain one even after several iterations of ChatGPT’s algorithms. But anyway, I will need to perfect the coherence, fluency, and accuracy of my writing before I even dream of passing the reverse Turing test anytime soon!

¹ A reverse Turing test would be one where a human being tries to pass as a robot in an interactive session like this. I don’t know what good it would do for humans, but at some point in the future it may become important for robots… This encounter with ChatGPT was good enough to induce the idea, which I vainly hoped was an original one, but I found that it has been around for at least the last few years. Variants of it may even go way back to the dystopian SF stories where the future of humans is hijacked by robotic overlords. It is a depressing thought that, for a very brief and desperate period, the last of us (to borrow from an unrelated new HBO series) may seek prep schools for the reverse Turing test to help evade being rounded up and taken off the Matrix. Good luck, everyone!